Volume 36 Number 6 | December 2022

Melody Boudreaux Nelson, MS, MLS(ASCP)CM

Ethical concerns regarding explainability, data ownership, and discriminatory outcomes pose substantial barriers to large-scale AI-CDSS implementation.1,2,6-8 While a rudimentary example, consider bacteria identification on an automated urinalysis analyzer. Can you explain how the instrument knows it is looking at bacteria? Does the instrument reach the same conclusion you would at the microscope? The future is far more complex. At a minimum, the predictive goals of case-specific AI-CDSS could directly impact morbidity rates, screening programs, and institutional staffing.

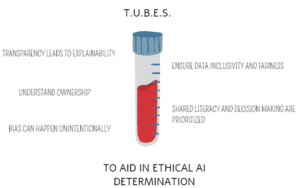

Further, an ethically derived AI-CDSS can reduce the risk of diagnostic error. The TUBES technique can be used to assess the ethical suitability of AI-CDSS from industry partners or homegrown solutions (Figure 1).

Transparency requires that all functional components of the algorithm and the system’s behavior have the capacity to be understood.1,2,6 Clinical laboratories should have the ability to explain why the AI-CDSS determined any programmed indicator. Ideally, decision point identification (either by human or non-human means) at the specific patient level would be possible.2

“Black-box models” (BBMs) contain elements that cannot be evaluated or explained.2 Machine learning or artificial neural network models can hone their decision-making skills through active learning.1 Thus, the ability to explain what the algorithm has learned or weighted in reaching a recommended intervention can be impaired. BBMs introduce a significant safety risk as outputs cannot be validated or predicted.6 Clinical laboratories should not report unexplainable values that cannot be validated or reproduced by standard means. Moreover, they should inquire about transparency to preserve trust and autonomy with patients and providers.1

In patient-focused care, laboratory results are owned by the patient. An individual’s decision to share their data with AI-CDSS should involve informed consent.8 Sharing data between private and public entities could result in data being harnessed for use in other capacities not outlined in the consent or contracting process.8 Delineation between what a patient consents to and the vast goals of AI-CDSS within healthcare is critical.8 Industry partners should only receive the data truly needed. Verification that the informed consent process includes appropriate language toward the expansiveness of patient data use by private or public entities is paramount.
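The principle that partners receive only the data truly needed can be illustrated with a short sketch. It assumes a result record is a simple dictionary and that the consent process yields an explicit set of permitted field names. The function names, field names, and values below are hypothetical and do not come from any specific laboratory information system.

```python
def minimize_record(record, consented_fields):
    """Return a copy of the record containing only fields covered by consent."""
    return {key: value for key, value in record.items() if key in consented_fields}


def unconsented_fields(record, consented_fields):
    """List the fields that would be withheld, for audit or review."""
    return sorted(set(record) - set(consented_fields))


# Hypothetical example record and consent scope (illustrative values only).
patient_result = {
    "patient_id": "EXAMPLE-001",
    "test_code": "GLU",
    "result_value": 5.4,
    "home_address": "not needed by the partner",
}
consent_scope = {"test_code", "result_value"}

shared = minimize_record(patient_result, consent_scope)
withheld = unconsented_fields(patient_result, consent_scope)
```

Here `shared` holds only the test code and result value, while `withheld` names the fields that stay inside the laboratory. Recording the withheld list alongside each transfer is one way to document that the data shared matched the consent.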

A common laboratory adage is “garbage in, garbage out.” This concept of pre-analytic garbage can be applied to how AI-CDSS models are developed. If the data used in the development stages of an AI-CDSS is incorrect, the model does not have the capacity to know this. Wrong test values for modeling, underrepresentation of target populations, or a lack of diversity affects how an algorithm performs and, subsequently, introduces bias. Simply put, a prediction may be valid for one population but not for another. Interestingly, when creating thought patterns for AI-CDSS, developers can reflect their own implicit bias.9 How an AI-CDSS was trained, and the data selection process related to the targeted disease, should be understood prior to implementation.

Interventional methods should be inclusive of the diverse populations seeking care and discern fairly across all persons. Fairness, unlike inclusivity, is often a downstream process as an AI-CDSS weighs the information it is given.1 For example, race and ethnicity could play a factor in how an AI-CDSS determines risk for a genetic factor. A poorly developed AI-CDSS could flag all individuals identified within a certain race and ethnicity as high risk and recommend interventions whether they possess the genetic sequence or not. Conversely, an AI-CDSS could under-recommend the intervention in underrepresented populations.

Clinical laboratories should ask what approaches were taken to ensure data inclusivity and fairness. This includes promotion of access in areas of healthcare disparities. Validation plans should be inclusive of specimens representing diverse patient populations.
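As one illustration of what an inclusive validation plan might check, the sketch below compares sensitivity (the fraction of true positives the AI-CDSS detects) across patient groups and flags any group that trails the best-performing group by more than a chosen margin. The group labels, the sample data, and the 10-point margin are assumptions made for illustration, not recommended acceptance criteria.

```python
from collections import defaultdict


def sensitivity_by_group(records):
    """Compute sensitivity per group.

    records: iterable of (group, truth, predicted), where truth and
    predicted are booleans (condition present / flagged by the AI-CDSS).
    Groups with no true-positive cases are omitted from the result.
    """
    detected = defaultdict(int)
    missed = defaultdict(int)
    for group, truth, predicted in records:
        if truth:
            if predicted:
                detected[group] += 1
            else:
                missed[group] += 1
    groups = set(detected) | set(missed)
    return {g: detected[g] / (detected[g] + missed[g]) for g in groups}


def flag_gaps(sensitivities, max_gap=0.10):
    """Return groups whose sensitivity trails the best group by more than max_gap."""
    best = max(sensitivities.values())
    return sorted(g for g, s in sensitivities.items() if best - s > max_gap)


# Hypothetical validation specimens: (group, condition present, AI-CDSS flagged).
validation_set = (
    [("group_a", True, True)] * 4
    + [("group_b", True, True)] * 2
    + [("group_b", True, False)] * 2
)

sens = sensitivity_by_group(validation_set)
needs_review = flag_gaps(sens)
```

With this sample data, `group_a` has a sensitivity of 1.0 and `group_b` of 0.5, so `group_b` is flagged for review. A real validation plan would also need enough specimens per group for the comparison to be meaningful, and would likely examine specificity as well.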

Further, AI-CDSS outcome validation with care specialists’ input ensures that algorithms are aligned with care models. An interdisciplinary, proactive approach in AI-CDSS drafting and design will create content that is ethical.1,2,6,8 This involves sharing knowledge across care domains and bringing the unique expertise of stakeholders to the developers. It cannot be assumed that an AI-CDSS developer has literacy in diagnostic data. Likewise, if a clinical laboratory is lacking in AI-CDSS literacy, it should seek training.

Most patients lack the data literacy needed to understand even an explainable health AI-CDSS.8 This has prompted discussions on whether it is enough to state that an AI-CDSS was used to generate results, or whether the parameters and underlying premises that contributed to the final recommendation should also be shared.1,8 Shared decision making is at risk when providers or patients are faced with interventions based on AI-CDSS that they do not understand. Clinical laboratories should adopt standardized procedures for provider and patient communication and educate personnel for consistent messaging. Employment of trained AI-CDSS resources to provide consultation may be necessary in the future.

In summation, the TUBES technique can serve as a newcomer’s preliminary checklist when approaching AI-CDSS solutions. Value-based healthcare models are here to stay. Clinical laboratories must demonstrate that their impact is holistic. AI-CDSS presents an opportunity to engage in advancing healthcare practice and promote the laboratory profession’s visibility while addressing health equity and medical ethics.

If the clinical laboratory does not proactively advocate for its role in ethical AI-CDSS development, another party will fill in the blanks. A reactionary approach may directly impact our ability to practice laboratory medicine in a way that preserves patient safety and the highest levels of laboratory quality.

This article is not an expansive review of AI-CDSS or ethics. It is intended to promote discussion on ethical implementation of AI-CDSS throughout clinical laboratories.

References

1. Chauhan, Chhavi, and Rama R Gullapalli. “Ethics of AI in Pathology: Current Paradigms and Emerging Issues.” The American journal of pathology vol. 191,10 (2021): 1673-1683. doi:10.1016/j.ajpath.2021.06.011

2. Amann, Julia et al. “Explainability for artificial intelligence in healthcare: a multidisciplinary perspective.” BMC medical informatics and decision making vol. 20,1 310. 30 Nov. 2020, doi:10.1186/s12911-020-01332-6

3. Cech, Laura. “Sepsis-Detection AI Has the Potential To Prevent Thousands of Deaths.” Hub.jhu.edu July 2021. https://hub.jhu.edu/2022/07/21/artificial-intelligence-sepsisdetection/

4. University of Florida: College of Pharmacy. “UF Health researchers use artificial intelligence to better predict hepatitis C treatment outcomes.” Pharmacy.ufl.edu Feb. 11, 2022. https://pharmacy.ufl.edu/2022/02/11/uf-health-researchersuse-artificial-intelligence-to-better-predict-hepatitis-ctreatment-outcomes/

5. David, Liliana et al. “Artificial Intelligence and Antibiotic Discovery.” Antibiotics (Basel, Switzerland) vol. 10,11 1376. 10 Nov. 2021, doi:10.3390/antibiotics10111376

6. Siala, Haytham, and Yichuan Wang. “SHIFTing artificial intelligence to be responsible in healthcare: A systematic review.” Social science & medicine (1982) vol. 296 (2022): 114782. doi:10.1016/j.socscimed.2022.114782

7. Gundersen, Torbjørn, and Kristine Bærøe. “The Future Ethics of Artificial Intelligence in Medicine: Making Sense of Collaborative Models.” Science and engineering ethics vol. 28,2 17. 1 Apr. 2022, doi:10.1007/s11948-022-00369-2

8. Morley, Jessica et al. “The ethics of AI in health care: A mapping review.” Social science & medicine (1982) vol. 260 (2020): 113172. doi:10.1016/j.socscimed.2020.113172

Melody Boudreaux Nelson is the Laboratory Information Systems Manager at the University of Kansas Health System in Kansas City, Kansas.